When testing Salesforce, teams often want to verify how a workflow looks to different users. A common strategy is to automate the UI with a functional automation tool such as Selenium.

Depending on the number of profiles in your Salesforce organization, this can be a very time-consuming and brittle process. It entails running the same workflow once per profile while checking both read and write accessibility for many fields, which in turn depends on the page layout.

Taking this route, we run the risk of inverting our test pyramid. The remedy is fairly simple: profile configuration is available in the metadata XML, and object and field permissions can be queried with SOQL. This raises the question: how can we structure a test to verify field permission accessibility for a given profile?
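To make the SOQL idea concrete, here is a minimal sketch in Python (the language of the generator script later in this post) of the query that the tests below rely on. The helper names `escape_soql_literal` and `build_field_permissions_query` are illustrative assumptions, not part of the original code; the query text mirrors the one used in the Apex test.

```python
def escape_soql_literal(value: str) -> str:
    """Backslash-escape characters that would break a SOQL string literal."""
    return value.replace("\\", "\\\\").replace("'", "\\'")


def build_field_permissions_query(sobject: str, profile: str) -> str:
    """Return a SOQL query listing field-level permissions for one profile.

    Hypothetical helper: a profile's field permissions are stored on the
    permission set owned by that profile, hence the sub-select.
    """
    return (
        "SELECT Id, Field, SObjectType, PermissionsRead, PermissionsEdit "
        "FROM FieldPermissions "
        f"WHERE SObjectType = '{escape_soql_literal(sobject)}' "
        "AND ParentId IN (SELECT Id FROM PermissionSet "
        f"WHERE PermissionSet.Profile.Name = '{escape_soql_literal(profile)}')"
    )


if __name__ == "__main__":
    print(build_field_permissions_query("Contact", "System Administrator"))
```

Escaping the profile name matters mostly when a query is assembled as a string outside Apex; inside Apex, bind variables such as `:profile` take care of it.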

1) Overall test case (visit this link to understand Salesforce unit testing)

@isTest

public class ContactObjectTest {

static testMethod void testContactReadWriteFieldsSystemAdministratorProfile() {

runProfileTest('Contact', 'System Administrator', getContactSystemAdministratorFields());

}

}

2) Flesh out the generator

@isTest

public class ContactObjectTest {

static String writeFieldName = 'PermissionsEdit';

/**

object = Contact

profile = System Administrator

**/

private static void runProfileTest(String objectName, String profile, Map<String, Map<String, Boolean>> expectedPerms) {

Boolean success = true;

try

{

List<FieldPermissions> perms = [SELECT Id, Field, SObjectType, PermissionsRead, PermissionsEdit

FROM fieldPermissions

WHERE SObjectType = :objectName

AND parentId in ( SELECT id

FROM permissionSet

WHERE PermissionSet.Profile.Name = :profile)];

Set<String> nonExpectedFieldsFound = new Set<String>();

// Go through actual perms and make sure they exist if expected

for(FieldPermissions perm : perms) {

try {

Map<String, Boolean> expectedPerm = expectedPerms.get(perm.Field);

System.assertEquals(expectedPerm.get(writeFieldName), perm.PermissionsEdit,

'Permission named ' + perm.Field + ' is ' + perm.PermissionsEdit + ' but expected ' + expectedPerm.get(writeFieldName)

);

} catch (NullPointerException e) {

nonExpectedFieldsFound.add(perm.Field);

System.debug('Found a field that was not in expected permissions: ' + perm.Field);

success = false;

}

}

System.assertEquals(0, nonExpectedFieldsFound.size(), 'Found Read only fields in ' + objectName + ' for ' +

'profile -- ' + profile + ' -- that were not in expected set: ' + nonExpectedFieldsFound);

}

catch (Exception e)

{

System.debug('Failed profile field test ' + e.getMessage());

success = false;

}

finally

{

System.assert(success);

}

}

}
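The checking logic in runProfileTest can also be sketched outside Apex. The following Python function is an illustration under assumptions: the name `compare_field_permissions` and the flat `field -> editable` dictionaries are hypothetical simplifications of the nested map the Apex code uses.

```python
from typing import Dict, List, Tuple


def compare_field_permissions(
    actual: Dict[str, bool], expected: Dict[str, bool]
) -> Tuple[List[str], List[str]]:
    """Compare actual edit permissions against the expected map.

    Returns (unexpected, mismatched):
      unexpected - fields returned by the org but missing from `expected`
      mismatched - fields whose edit flag differs from the expected value
    """
    unexpected = sorted(f for f in actual if f not in expected)
    mismatched = sorted(
        f for f, editable in actual.items()
        if f in expected and expected[f] != editable
    )
    return unexpected, mismatched


if __name__ == "__main__":
    actual = {"Contact.Title": True, "Contact.Email": False, "Contact.Fax": True}
    expected = {"Contact.Title": True, "Contact.Email": True}
    print(compare_field_permissions(actual, expected))
```

Like the Apex version, this sketch only walks the permissions that actually exist; a field listed in the expected map but absent from the query results is not reported.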

3) Add the test specific expected field accessibility map (createPerm, getContactSystemAdministratorFields methods)

@isTest

public class ContactObjectTest {

static String writeFieldName = 'PermissionsEdit';

/**

object = Contact

profile = System Administrator

**/

private static void runProfileTest(String objectName, String profile, Map<String, Map<String, Boolean>> expectedPerms) {

Boolean success = true;

try

{

List<FieldPermissions> perms = [SELECT Id, Field, SObjectType, PermissionsRead, PermissionsEdit

FROM fieldPermissions

WHERE SObjectType = :objectName

AND parentId in ( SELECT id

FROM permissionSet

WHERE PermissionSet.Profile.Name = :profile)];

Set<String> nonExpectedFieldsFound = new Set<String>();

// Go through actual perms and make sure they exist if expected

for(FieldPermissions perm : perms) {

try {

Map<String, Boolean> expectedPerm = expectedPerms.get(perm.Field);

System.assertEquals(expectedPerm.get(writeFieldName), perm.PermissionsEdit,

'Permission named ' + perm.Field + ' is ' + perm.PermissionsEdit + ' but expected ' + expectedPerm.get(writeFieldName)

);

} catch (NullPointerException e) {

nonExpectedFieldsFound.add(perm.Field);

System.debug('Found a field that was not in expected permissions: ' + perm.Field);

success = false;

}

}

System.assertEquals(0, nonExpectedFieldsFound.size(), 'Found Read only fields in ' + objectName + ' for ' +

'profile -- ' + profile + ' -- that were not in expected set: ' + nonExpectedFieldsFound);

}

catch (Exception e)

{

System.debug('Failed profile field test ' + e.getMessage());

success = false;

}

finally

{

System.assert(success);

}

}

static Map<String, Boolean> createPerm(String writeName, Boolean value) {

Map<String, Boolean> perm = new Map<String, Boolean>();

perm.put(writeName, value);

return perm;

}

/****************** PROFILE FIELD ACCESS TESTS *****************/

static Map<String, Map<String, Boolean>> getContactSystemAdministratorFields() {

Map<String, Map<String, Boolean>> ContactFields = new Map<String, Map<String, Boolean>>();

ContactFields.put('Contact.Title', createPerm(writeFieldName, True));

ContactFields.put('Contact.ReportsTo', createPerm(writeFieldName, True));

ContactFields.put('Contact.Phone', createPerm(writeFieldName, True));

ContactFields.put('Contact.OtherPhone', createPerm(writeFieldName, True));

ContactFields.put('Contact.OtherAddress', createPerm(writeFieldName, True));

ContactFields.put('Contact.MobilePhone', createPerm(writeFieldName, True));

ContactFields.put('Contact.MailingAddress', createPerm(writeFieldName, True));

ContactFields.put('Contact.LeadSource', createPerm(writeFieldName, True));

ContactFields.put('Contact.Jigsaw', createPerm(writeFieldName, True));

ContactFields.put('Contact.HomePhone', createPerm(writeFieldName, True));

ContactFields.put('Contact.HasOptedOutOfFax', createPerm(writeFieldName, True));

ContactFields.put('Contact.HasOptedOutOfEmail', createPerm(writeFieldName, True));

ContactFields.put('Contact.Fax', createPerm(writeFieldName, True));

ContactFields.put('Contact.Email', createPerm(writeFieldName, True));

ContactFields.put('Contact.DoNotCall', createPerm(writeFieldName, True));

ContactFields.put('Contact.Description', createPerm(writeFieldName, True));

ContactFields.put('Contact.Department', createPerm(writeFieldName, True));

ContactFields.put('Contact.Birthdate', createPerm(writeFieldName, True));

ContactFields.put('Contact.AssistantPhone', createPerm(writeFieldName, True));

ContactFields.put('Contact.AssistantName', createPerm(writeFieldName, True));

ContactFields.put('Contact.Account', createPerm(writeFieldName, True));

ContactFields.put('Contact.Time_Zone__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Suffix__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Seasonal_Only__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Salutation__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.SMSEnabled__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Rehire_Location__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Rehire_Eligibility_Status__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Previously_Used_Full_Name__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Preferred_Phone_Number__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Portal_User__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Portal_User_Link__c', createPerm(writeFieldName, False));

ContactFields.put('Contact.Override_Flag__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Mobile_Phone__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Mobile_Phone_Country_Code__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Middle_Name__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Last_Name__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Language__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Internal_External__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Internal_Email__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Internal_Candidate__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Home_Phone__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Home_Phone_Country_Code__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.First_Name__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.External_Email__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Employee_ID__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.EMPL_Rcd_No__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Current_Mailing_Adddress__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Country_Code_PS__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Contact_Profile_Submitted__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Candidate_ID__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Agency_Name__c', createPerm(writeFieldName, True));

ContactFields.put('Contact.Address_Line_2__c', createPerm(writeFieldName, True));

return ContactFields;

}

static testMethod void testContactReadWriteFieldsSystemAdministratorProfile() {

runProfileTest('Contact', 'System Administrator', getContactSystemAdministratorFields());

}

}

Now that we have seen how the unit test verifies field permissions under the System Administrator profile, extending it to other profiles is as simple as adding another testMethod along with its getObjectProfileFields map. Since the approach is also reusable across objects (Account, Contact, etc.), we can create a generator that cranks out tests for a given object and the desired profiles.
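The naming convention behind the per-profile methods can be sketched in a few lines of Python. The function names `format_profile_name`, `apex_test_method_name`, and `apex_fields_method_name` are hypothetical; the regular expression is the same one the generator script in this post uses to turn a profile name into an identifier.

```python
import re


def format_profile_name(profile: str) -> str:
    """Strip everything except ASCII letters from a profile name."""
    return re.sub(r"[^a-zA-Z]+", "", profile)


def apex_test_method_name(sobject: str, profile: str) -> str:
    """Build the Apex test method name for one object/profile pair."""
    return f"test{sobject}ReadWriteFields{format_profile_name(profile)}Profile"


def apex_fields_method_name(sobject: str, profile: str) -> str:
    """Build the name of the method that returns the expected-field map."""
    return f"get{sobject}{format_profile_name(profile)}Fields"


if __name__ == "__main__":
    for name in ("System Administrator", "Alternative-System Administrator"):
        print(apex_test_method_name("Contact", name))
        print(apex_fields_method_name("Contact", name))
```

One consequence of stripping non-letters is that digits are dropped too, so two profiles that differ only by a number (for example "Sales Tier 1" and "Sales Tier 2") would map to the same method name and need to be disambiguated by hand.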

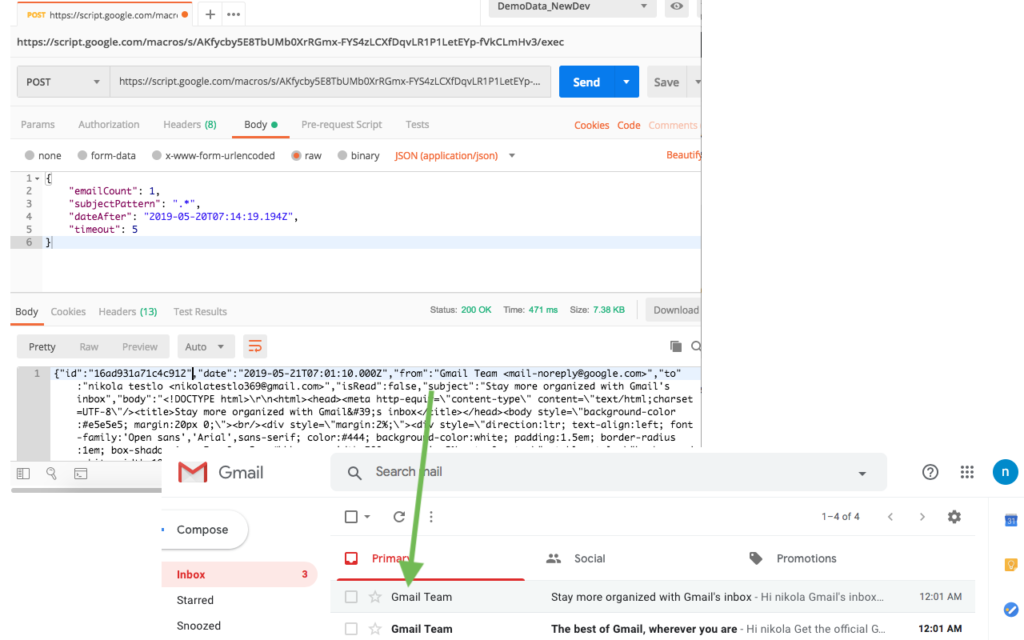

Here’s a script to do so in Python 3.7.2. There are four required parameters: access token, instance URL, profiles (comma-separated), and object. You can save this as generateProfileUnitTestsFromSoql.py.

#!/usr/bin/env python3

"""

> python.exe generateProfileUnitTestsFromSoql.py

-t '00D110000001O34!ARIAQLW5MsJqTVUbwgl13xDW_UGvZBG5GEJC.4bxsuzWc.ehOrnuRhT.MtMSrb0wCP07wfc71C6gEOnsSP0CknZnPdkzDUnc'

-u 'https://customDomain.my.salesforce.com'

-p 'System Administrator,Alternative-System Administrator,Standard User'

-o Contact

"""

import argparse

import requests

import re

parser = argparse.ArgumentParser()

parser.add_argument("--authtoken", "-t", type=str, required=True)

parser.add_argument("--instanceurl", "-u", type=str, required=True)

parser.add_argument("--sobject", "-o", type=str, required=True)

parser.add_argument("--profilenames", "-p", type=str, required=True)

args = parser.parse_args()

sobject = args.sobject

token = args.authtoken

instanceUrl = args.instanceurl

profileNames = args.profilenames.split(',')

filetemplatePre = """

@isTest

public class ContactObjectTest {{

static String writeFieldName = 'PermissionsEdit';

/**

object = Contact

profile = System Administrator

**/

private static void runProfileTest(String objectName, String profile, Map<String, Map<String, Boolean>> expectedPerms) {{

Boolean success = true;

try

{{

List<FieldPermissions> perms = [SELECT Id, Field, SObjectType, PermissionsRead, PermissionsEdit

FROM fieldPermissions

WHERE SObjectType = :objectName

AND parentId in ( SELECT id

FROM permissionSet

WHERE PermissionSet.Profile.Name = :profile)];

Set<String> nonExpectedFieldsFound = new Set<String>();

// Go through actual perms and make sure they exist if expected

for(FieldPermissions perm : perms) {{

try {{

Map<String, Boolean> expectedPerm = expectedPerms.get(perm.Field);

System.assertEquals(expectedPerm.get(writeFieldName), perm.PermissionsEdit,

'Permission named ' + perm.Field + ' is ' + perm.PermissionsEdit + ' but expected ' + expectedPerm.get(writeFieldName)

);

}} catch (NullPointerException e) {{

nonExpectedFieldsFound.add(perm.Field);

System.debug('Found a field that was not in expected permissions: ' + perm.Field);

success = false;

}}

}}

System.assertEquals(0, nonExpectedFieldsFound.size(), 'Found Read only fields in ' + objectName + ' for ' +

'profile -- ' + profile + ' -- that were not in expected set: ' + nonExpectedFieldsFound);

}}

catch (Exception e)

{{

System.debug('Failed profile field test ' + e.getMessage());

success = false;

}}

finally

{{

System.assert(success);

}}

}}

static Map<String, Boolean> createPerm(String writeName, Boolean value) {{

Map<String, Boolean> perm = new Map<String, Boolean>();

perm.put(writeName, value);

return perm;

}}

/****************** PROFILE FIELD ACCESS TESTS *****************/

{tests}

}}

"""

fileTemplateInsertTest = """

static testMethod void test{sobject}ReadWriteFields{profileFormatted}Profile() {{

runProfileTest('{sobject}', '{profile}', {expectedFieldsMethod}());

}}

"""

fileTemplateInsertExpectedFeilds = """

static Map<String, Map<String, Boolean>> get{sobject}{profileFormatted}Fields() {{

Map<String, Map<String, Boolean>> {sobject}Fields = new Map<String, Map<String, Boolean>>();

{insertExpectedFeild}

return {sobject}Fields;

}}

"""

fileTemplateInsertExpectedFeild = """

{sobject}Fields.put('{fieldName}', createPerm(writeFieldName, {editFieldAccess}));"""

testFile = ''

tests = ''

for profileName in profileNames:

    # Query the field permissions held by this profile's permission set

    query = ("SELECT Id, Field, SObjectType, PermissionsRead, PermissionsEdit FROM fieldPermissions "

             "WHERE SObjectType = '" + sobject + "' AND parentId in "

             "( SELECT id FROM permissionSet WHERE PermissionSet.Profile.Name = '" + profileName + "')")

    # Passing the query via params lets requests URL-encode it

    response = requests.get(instanceUrl + "/services/data/v44.0/query",

                            params={'q': query},

                            headers={'Authorization': 'Bearer ' + token})

    # Stop early on an expired token or a malformed query

    response.raise_for_status()

    # Reset the expected-field lines for each profile

    expectedFeild = ''

    for record in response.json()['records']:

        # Get field

        fieldName = record['Field']

        editable = record['PermissionsEdit']

        # readable = record['PermissionsRead']

        expectedFeild += fileTemplateInsertExpectedFeild.format(sobject=sobject,

                                                                fieldName=fieldName,

                                                                editFieldAccess=editable)

    # Keep only letters so the profile name can be used in an Apex identifier

    profileFormatted = re.sub('[^a-zA-Z]+', '', profileName)

    insertExpectedFields = fileTemplateInsertExpectedFeilds.format(sobject=sobject,

                                                                   profileFormatted=profileFormatted,

                                                                   insertExpectedFeild=expectedFeild)

    insertTest = fileTemplateInsertTest.format(sobject=sobject,

                                               profileFormatted=profileFormatted,

                                               profile=profileName,

                                               expectedFieldsMethod='get' + sobject + profileFormatted + 'Fields')

    tests += insertExpectedFields

    tests += insertTest

testFile = filetemplatePre.format(tests=tests)

# Write the generated Apex class to disk; the with block closes the file

with open('generateProfileUnitTests.cls', 'w') as f:

    f.write(testFile)
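One thing the script does not handle is a paginated response. The Salesforce REST query endpoint marks an incomplete result with `"done": false` and supplies a `nextRecordsUrl` for the next page. The sketch below shows one way to follow those links; `iter_query_records` and the injected `get_json` callable are hypothetical names introduced here so the logic can be exercised without a network connection.

```python
from typing import Any, Callable, Dict, Iterator
from urllib.parse import urlencode


def iter_query_records(
    instance_url: str,
    soql: str,
    get_json: Callable[[str], Dict[str, Any]],
    api_version: str = "v44.0",
) -> Iterator[Dict[str, Any]]:
    """Yield every record of a SOQL query, following nextRecordsUrl links.

    `get_json` is an assumed callable that performs an authenticated GET
    on the given URL and returns the decoded JSON body.
    """
    url = f"{instance_url}/services/data/{api_version}/query?" + urlencode({"q": soql})
    while url:
        page = get_json(url)
        yield from page["records"]
        next_path = page.get("nextRecordsUrl")
        # Continue only while the server reports more pages
        url = instance_url + next_path if next_path and not page.get("done", True) else None


if __name__ == "__main__":
    # Canned pages standing in for real API responses
    pages = iter([
        {"done": False, "nextRecordsUrl": "/services/data/v44.0/query/abc-2000",
         "records": [{"Field": "Contact.Title", "PermissionsEdit": True}]},
        {"done": True,
         "records": [{"Field": "Contact.Email", "PermissionsEdit": True}]},
    ])
    for rec in iter_query_records("https://example.my.salesforce.com",
                                  "SELECT Id FROM FieldPermissions",
                                  lambda url: next(pages)):
        print(rec["Field"])
```

In the script above, `get_json` could be something like `lambda url: requests.get(url, headers={'Authorization': 'Bearer ' + token}).json()`.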